The End of the Train-Test Split

2015: Loosely based on a true story.

You are a machine learning engineer at Facebook in Menlo Park. Your task: build the best butt classification model, which decides if there is an exposed butt in an image.

The content policy team in D.C. has written country-specific censorship rules based on cultural tolerance for gluteal cleft—or butt crack, for the uninitiated.

- Germany: 0% cleft.

- Zimbabwe: 30% cleft.

- Cupertino: 0%.

- Montana: 20%.

A PM on your team writes data labeling guidelines for a business process outsourcing firm (BPO), and each example in your dataset is triple-reviewed by the firm's outsourced team to ensure consistency. You skim the labels, which seem reasonable.

import torch

import pandas as pd

from torch.utils.data import DataLoader, TensorDataset

from sklearn.model_selection import train_test_split

df = pd.read_csv("gluteal_cleft_labels.csv")

X = df.drop("label", axis=1).values

y = df["label"].values

x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)You decide to train a CNN: it'll be perfect for this edge detection task. Two months later, you've cracked it. Your model goes live, great success, 92% precision, 98% recall. You never once had to talk to the policy team in D.C.

2023: The butt model has been in production for 8 years.

Another email: Policy has heard about LLMs and thinks it's time to build a more "context-aware" model. They would like the model to understand whether there is sexually suggestive posing, sexual context, or artistic intent in the image.

You receive a 10 page policy doc. The PM cleans it up a bit and sends it to the BPO. The data is triple reviewed, you skim the labels, and they seem fine.

You make an LLM decision tree, one LLM call per policy section, and aggregate the results. Two months pass. You are stuck at 85% precision and recall, no matter how much prompt engineering and model tuning you do. You try going back to a CNN, training it on the labels. It scores 83%.

Your data science spidey-sense tingles. Something is wrong with the labels or the policy.

You email the policy team, sending them the dataset and results.

The East Coast Metamates say they looked at 20 of the labels. 60% were good, 20% were wrong, 20% were edge cases they hadn’t thought of.

The butt model lives to fight another day, or at least until these discrepancies get sorted out.

2025.

The butt model is still in production… What went wrong?

The train-test split does not work for classification tasks at the frontier of LLM capability.

It doesn't matter if the task is content policy, sales chatbots, legal chatbots, or AI automation in any other industry.

Test Split: Any task complicated enough to require an LLM will need policy expert labels.[1] To detect nudity, outsourced labels will do, but to enforce a 10 page adult policy, you need the expert. Experts have little time: they can't give you enough labels to run your high scale training runs. You can't isolate enough data for a test set without killing your training set size. On top of that, a 10 page policy comes with so much gray area that you can’t debug test set mistakes without looking at test set results and model explanations.

Train Split: You no longer need a large, discrete training set because LLMs often don't need to be trained on data: they need to be given clear rules in natural language, and maybe 5-10 good examples.[2] Today, accuracy improvements come from an engineer better understanding the classification rules and better explaining them to the model, not from tuning hyperparameters or trying novel RL algorithms. Is a discrepancy between the LLM result and the ground truth due to the LLM’s mistake, the labeler’s mistake, or an ambiguous policy line? This requires unprecedented levels of communication between policy and engineering teams.

Recommending a New Split: Don’t train the LLM on any of the dataset. Address the inevitable biases with evaluation on blind, unlabeled data.[3] To understand why this is the best approach, we need to dive deeper into why the train-test split paradigm doesn't suffice.

Policy or operations leader? There's a version of this article for you: The End of the Eng-Ops Split

Core problems with complex classification tasks

Policy Experts write abstract rules. For older classification tasks, the rules had to be simple, because simple was all that models could do. Are there guns in the image? Is there a minor in the image? Complicated tasks are harder to pin down.

- What is hate speech?

- What is sexually suggestive?

- What makes false advertising cross from overdone to illegal?

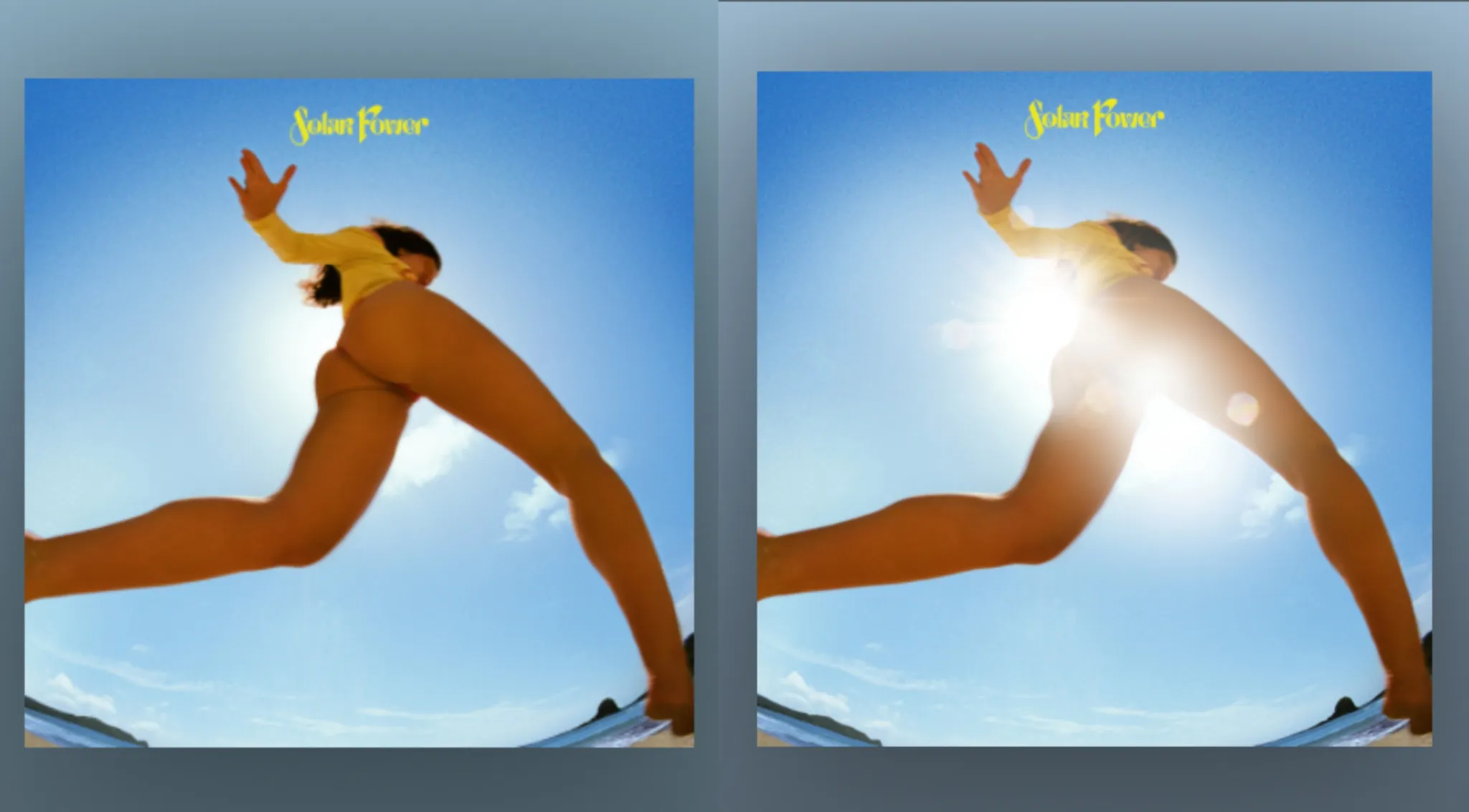

Take these examples. Sexually suggestive? Artistic expression? Sufficient censorship?

Source: Lorde's album covers from Vice.com, where you can find discussion of what is visible or not in each photo.

Most policy documents are not well-kept. A content policy is typically simplified, operationalized, and sent to outsourced teams to enforce. Edge cases and discrepancies found in India never make it back to policy teams in DC, so abstract rules are rarely pinned down.

In production, we see 15-20% false positive rates on BPOs. Half are attributable to human error, half to policy gray area.

To resolve edge cases, labeling tasks require an expert's time. BPO agents can eyeball how much butt is visible, but struggle with what is "sexually suggestive." BPOs are low-wage workers in countries like India or the Phillipines, and may have different definitions of "sexual context" than the policy writers intended. The costs of training them are often prohibitive at scale.

Using in-house agents is not sufficient for training data, either, as small alignment issues in the dataset cause large issues in production: If internal agents are 95% accurate (pretty good), the ceiling for the LLM's performance is 95%. If the LLM gets 95% of those labels right, its accuracy will be 90%.

Hard classification tasks have high rates of expert disagreement. Ask two people if there's a gun in an image, odds are they'll agree. Ask two policy experts whether a pose is sexually suggestive per their definition, you will start a one hour debate. If two experts only agree 95% of the time, then hand off to internal agents for labeling at 95% accuracy, then the LLM is 95% accurate, you are down to 86% LLM accuracy.

Language models see details in data that even experts miss. LLMs read every word of the product description. They scrutinize every image for a single pixel of gluteal cleft. They see gluteal cleft through sheer clothing, in low opacity, and in the bottom left corner of the back of a t-shirt. Even if experts have reviewed the data, there must be a re-review and feedback loop to check what the LLM has flagged.

Since labels are often wrong or ambiguous, you cannot keep the test set blind. If the LLM is right and the labels are wrong, either because the expert missed something, the policy was ambiguous, or the outsourced labeler was wrong, you have to look at the test set results to check. Moreover, you need to review the LLM's explanation while reviewing the new data to see what it found, confounding any true "blind" test.[4]

In production, we see anywhere from 15-30% error rates in data considered "golden sets" by operations teams.

Given the need for expert input and policy clarifications, you cannot maintain large enough training sets to keep the test set blind. For a simple policy, maintaining good labeled data is straightforward. However, attempting to integrate an LLM is often the first time a policy expert will be asked to scrutinize their rules and labels. Their time is valuable, and they will not be able to bring their expertise to a large dataset.

Complicated policies change, especially under scrutiny, and re-labeling affected examples is time-consuming. A leaner training set will be more valuable than a larger one in the long run.

Since these classification tasks are so complex, you can only "debug" the model by looking at the input and its explanations side-by-side. A policy might have dozens of rules and thousands of possible inputs, creating a fat-tail of model mistakes. Unlike traditional machine learning, where you fix mistakes by changing the design or hyperparameters of your model, you fix LLM mistakes by changing the prompt. You can directly fix a mistake (e.g. by telling the model "do not consider spreading legs fully clothed to be sexually suggestive"), so keeping mistakes hidden only hurts accuracy.[5]

You still need to run blind tests: QA the models on new data. Organizations end up running their models in "shadow mode" on production data, creating test examples without taking real-world actions. Here, you'll likely need an in-house agent to review the examples, then forward edge cases to a policy expert.

The Policy and Engineering Teams Need to be in Direct, Frequent Communication. The SF-DC split doesn't work anymore. Resolving edge cases and, in many cases, changing the policy to reflect patterns identified in the data requires collaboration.

Experts have historically not needed to look at the data—seen as a low-status task—but it is the only way to achieve high accuracy. This is an unsolved problem in many large organizations that blocks LLM integrations.

Most importantly, LLMs do not "train" on data the way traditional classifiers do, so there is often no need to have a "training" set, either. LLMs can enforce complicated, natural language rules because they can take natural language inputs, not because they can learn patterns from thousands of training examples. LLM accuracy is often a prompting task, not a design-your-RL-pipeline task.[1]

If you want a model to classify whether animals are endangered species, don't give it 1,000 examples of elephant ivory, 100 examples of every species on the CITES list, and 1,000 pictures of your non-endangered dog, give it the list of species names as inputs.

The "training" step for language models has to be policy alignment, not heating up GPUs. Since the data will always be flawed and the test set won't be blind, the machine learning engineer's priority should be spent working with policy teams to improve the data. That means surfacing edge cases and policy gray areas, clarifying policy definitions, and leveraging LLM outputs to find more discrepancies until data is high-quality and policy is clear.

In production, this is an ongoing process, as LLMs will always surface new interesting cases and policies will continue to change. Policies and enforcement are better for this feedback loop: it enables consistent, scaled enforcement platform-wide.

Today and Tomorrow

This is a paradigm shift that many machine learning teams, and enterprises as a whole, have not yet embraced. It requires a complete change in how we approach classification tasks.

If this is the road to automation, is it even worthwhile? The process described above, while arduous, is the shortest route to consistent policy enforcement to date. Before LLMs, running a successful quality assurance program would be prohibitively expensive. Retraining human agents takes far longer than retraining LLMs. Policy experts have historically never been owners in quality assurance processes, but now can be.

To save a little time, an in-house human agent might do a first review of the results, then a policy expert can review only the discrepancies. We find this tradeoff works well in production.

What are the implications for leveraging LLMs for tasks which do not have binary classifications? Can an LLM be a lawyer if this much work is required to align, evaluate, and test models? Will an LLM ever ~know what you mean~ and skip all these alignment steps?

One core problem with the LLM architecture is that the model doesn't know when it is wrong. Model improvements over the past few years mean the LLM is right more often, but when it is wrong, it doesn't have an outlet.

This is a perennial machine learning problem: a model does not know what is "out of distribution" for itself.

Until that problem is solved, there will have to be an engineer in the loop improving and testing the model, and a policy expert evaluating the results. You can do this for complicated tasks like writing a patent application, but you have to be rigorous, define a rubric, curate expert data, and regularly evaluate model outputs. Calculating accuracy of each "training run" will never be as easy as checking if model_output == ground_truth, and will require a human in the loop. These complex tasks are far more lucrative than binary classification, and smart people are working on them.

Not everybody will take this rigorous approach, and as models improve, they might not have to. Until then, the highest leverage way to spend your time in 2026 will be looking closely at your data, cleaning your data, and labeling your data.

Get the latest in Risk, Trust & Safety, and Applied AI

Expect ~1 email every 2 weeks, unsubscribe any time.